What you need to know to start working with Large Language Models in your business.

Large Language Models (LLMs) are redefining what it means to work with standardized communication in just about any form.

Chat-based support systems, quick summaries of large amounts of unstructured text, and automated generation of legal documents are just a few examples of what they can do. LLMs offer vast possibilities for companies to work with their data in radically new ways.

At the same time, many companies haven’t identified their actual use cases for LLMs. They may suspect there is potential, but they don’t know how to realize it, what the pitfalls are, or how to avoid them.

This blog post aims to help you understand what LLMs can do, what their limitations are, and, importantly, what needs to be done for a successful integration. We focus on GPT, the LLM “engine” behind OpenAI’s ChatGPT and the solution we generally work with.

What GPT does

Let’s start with the basics.

GPT (Generative Pre-trained Transformer) is a family of language models developed by OpenAI that transform text. It handles language and semantics well enough that you can provide it with some text, tell it to reason about that text based on some criteria, and it will output a new text. It is considered a form of AI, but it is in essence a text transformation. What you know as ChatGPT is simply an interface for an instance of the GPT engine run by OpenAI.

A common task that GPT is well-equipped for is analyzing large amounts of text. With the right instructions, GPT can act as a subject matter expert who reads through all the material, takes notes, and creates a new document or presentation based on these notes.

The optimal way of implementing GPT very much depends on the concrete use case, and that is where the challenge lies for most companies. At Flowtale we understand how GPT works, and we can build a solution using it, but we don’t know the process it is meant to improve. Meanwhile, companies know their processes, but they don’t have the insight into how GPT works and how it can be applied to their use case.

So far, we have worked with two fundamental approaches.

- The generalist solution is a flexible and ChatGPT-like experience tailored to your company. We create the ability to “chat” with company data to get desired information quickly and easily.

- The narrow solution automates a concrete task, e.g. writing an insurance claim or a legal document. It takes an input, executes the same steps as a subject matter expert would, and outputs a text or document which lives up to pre-defined and very specific criteria.

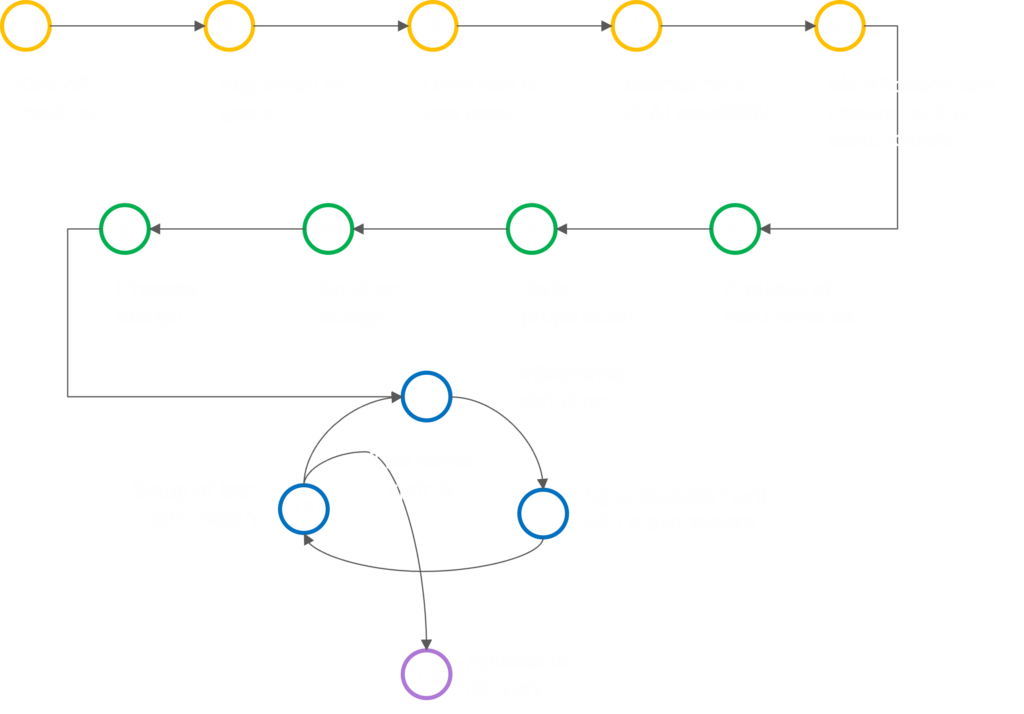

The GPT integration process. Our process for integrating GPT solutions. A well-structured process ensures faster delivery and a better end product.

You won’t be training your own GPT

A common misconception about private GPT solutions is that we let the model chew through a company’s data, after which it knows everything relevant and becomes the company’s personal GPT. That is possible to do, but it’s not the right solution for most companies.

There are three main approaches to working with LLMs.

1. Training a new LLM from scratch. This is a massive undertaking, requiring machine learning experts, large amounts of carefully curated data, and massive data centers. Currently only large tech companies have the resources to develop these so-called foundation models. OpenAI’s GPT, Google’s Gemini, and Meta’s Llama 2 are all foundation models, and they have been very well trained for a wide range of tasks covered by large amounts of carefully curated data.

2. Fine-tuning an existing model. This approach takes a foundation model, such as GPT, and continues its training based on the data you want. It is less demanding than training from scratch, but is still difficult, expensive and time-consuming. There is also a risk of so-called catastrophic forgetting, where the model loses valuable and necessary generalist skills because the new training data displaces them. It is a bit like the cliché about the professor who becomes extremely specialized in a field, but loses the ability to have a normal conversation and navigate society. If you want to focus on the low-hanging fruit of integrating GPT, then fine-tuning is not the option to start with.

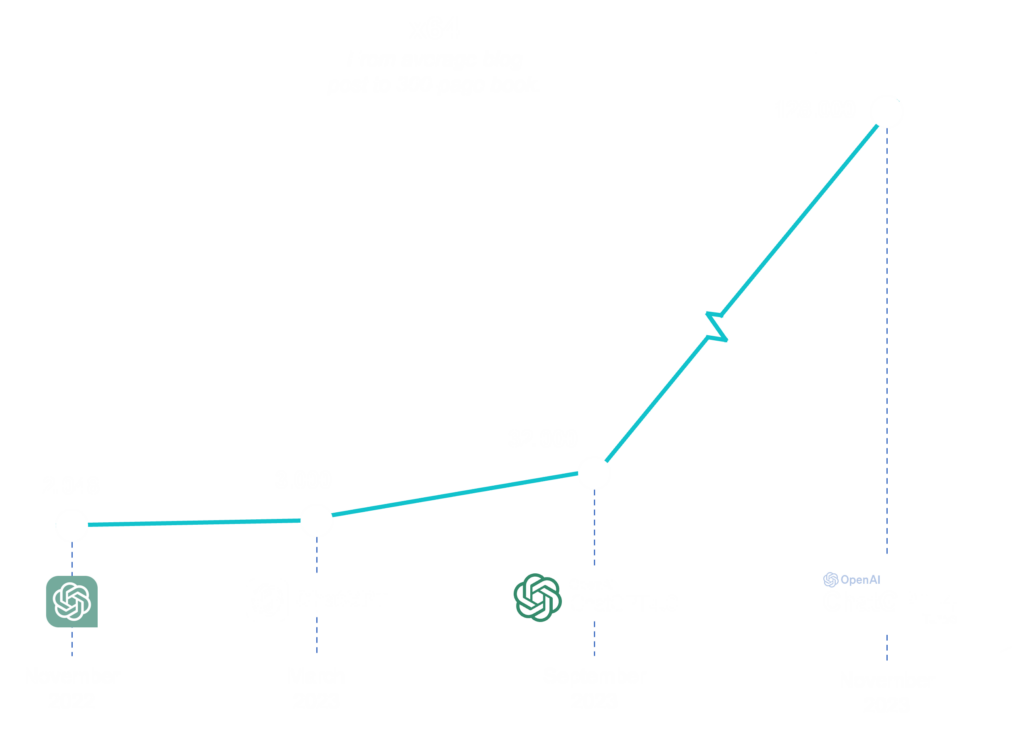

3. In-context learning. This is the approach Flowtale uses most. You use an existing foundation model in unchanged form, and you feed it the exact data it should consider, the context, along with every question you ask it. Together, the context and the question become the input to the model, also called the prompt. Instead of changing the “brain” fundamentally, you set up a system that automatically tells it what facts to consider when you ask it a question. Each new version of GPT greatly increases the amount of context it can handle, meaning that the possibilities with this approach are rapidly expanding. And once you are up and running with an in-context learning solution, you are well prepared for potentially moving to a fine-tuning solution, as much of the data preparation is the same.
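To make the idea of a prompt concrete, here is a minimal sketch of how context and question can be combined into a single input. The function name `build_prompt` and the instruction wording are illustrative assumptions, not a description of any specific production setup.

```python
def build_prompt(context_passages: list[str], question: str) -> str:
    """Combine the selected context and the user's question into one prompt."""
    # Number each passage so the model (and a human reviewer) can refer to it.
    numbered = "\n".join(
        f"[{i}] {passage}" for i, passage in enumerate(context_passages, start=1)
    )
    return (
        "Answer the question using only the context below. "
        "If the context does not contain the answer, say so.\n\n"
        f"Context:\n{numbered}\n\n"
        f"Question: {question}"
    )


if __name__ == "__main__":
    prompt = build_prompt(
        ["Invoices are due 30 days after issue.", "Late fees are 2% per month."],
        "When is an invoice due?",
    )
    print(prompt)
```

The resulting string is what gets sent to the model. The model itself is unchanged; only the text it receives differs from question to question.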

GPT’s capacity for context keeps growing. As of November 2023, GPT can take 128,000 “tokens” as context, roughly equivalent to 300 pages of standard text.
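The figures above imply roughly 427 tokens per page. The sketch below uses them to check whether a set of documents is likely to fit in the context. The 4-characters-per-token ratio is a rough rule of thumb for English text and an assumption here, as are the function names and the amount reserved for the answer; a real tokenizer would give exact counts.

```python
CONTEXT_WINDOW_TOKENS = 128_000  # figure quoted in the text (November 2023)
PAGES_EQUIVALENT = 300           # figure quoted in the text
CHARS_PER_TOKEN = 4              # assumption: rough rule of thumb for English


def tokens_per_page() -> float:
    """Tokens per page implied by the two quoted figures."""
    return CONTEXT_WINDOW_TOKENS / PAGES_EQUIVALENT


def estimate_tokens(text: str) -> int:
    """Rough token estimate: character count divided by 4, rounded up."""
    return -(-len(text) // CHARS_PER_TOKEN)


def fits_in_context(documents: list[str], reserved_for_answer: int = 4_000) -> bool:
    """True if the estimated total leaves room for the model's answer."""
    budget = CONTEXT_WINDOW_TOKENS - reserved_for_answer
    return sum(estimate_tokens(doc) for doc in documents) <= budget


if __name__ == "__main__":
    print(round(tokens_per_page()))             # about 427 tokens per page
    print(fits_in_context(["word " * 50_000]))  # 250,000 characters of text
```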

Garbage in, garbage out: Preparing the data

Whether you are aiming for a generalist or narrow solution, selecting the right data and preparing it correctly is crucial. Otherwise you will quickly be reminded of the classic phrase “garbage in, garbage out”. The system is only as good as the data you feed it, and often a company’s data simply isn’t ready for working with GPT.

Flowtale’s experts can help with this important first step. Before ChatGPT arrived, Flowtale specialized in managing data in the cloud, which involves the same management, processing and cleaning of data that is essential for creating a useful GPT setup.

To guarantee that your data is kept private, everything runs in a private cloud environment. Here we have a GPT engine installed along with the data that has been selected as context.

For a generalist solution, the company’s subject matter experts provide Flowtale with data sources they deem relevant for their work. We then populate databases and search indexes with the data, so it can be retrieved and used as context in a chat-like application, which is able to answer a variety of questions. We also set up processes to keep the data up to date.
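The retrieval step can be illustrated with a toy example. The sketch below ranks documents by how many words they share with the question; this is a deliberately simple stand-in for a real search index, and the function names and sample documents are hypothetical.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of words and numbers."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, documents: dict[str, str], top_k: int = 2) -> list[str]:
    """Return the names of the documents sharing the most words with the question."""
    question_words = tokenize(question)
    scored = [
        (len(question_words & tokenize(body)), name)
        for name, body in documents.items()
    ]
    # Drop documents with no overlap; sort by score (high first), then by name.
    relevant = sorted((s, n) for s, n in scored if s > 0)
    relevant.sort(key=lambda pair: (-pair[0], pair[1]))
    return [name for _, name in relevant[:top_k]]


if __name__ == "__main__":
    docs = {
        "vacation_policy": "Employees receive 25 vacation days per year.",
        "expense_policy": "Travel expenses are reimbursed within 30 days.",
        "office_hours": "The office is open from 8 to 17.",
    }
    print(retrieve("How many vacation days do employees receive?", docs))
```

The retrieved documents are then placed in the prompt as context. Production systems typically replace the word-overlap score with a proper search index or semantic search, but the flow is the same: find the relevant pieces first, then ask the model.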

For a narrow solution, the company’s subject matter experts hand-pick source data, typically documents, that are prime examples of the task being automated. Using GPT, the relevant information is extracted from these and turned into a condensed package of information, much as if a subject matter expert had gone through the material and taken notes. The condensed examples are then input as context along with relevant data from the current case. GPT distills the information and generates a final document that matches the example material.
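The two stages described above can be sketched as follows. The `complete` argument is a hypothetical stand-in for a call to the model (it takes a prompt and returns text), and the prompt wording is an assumption made for illustration.

```python
from typing import Callable

# Hypothetical stand-in for a call to the model: takes a prompt, returns text.
Complete = Callable[[str], str]


def condense_examples(examples: list[str], complete: Complete) -> list[str]:
    """Stage 1: turn each hand-picked example document into short notes."""
    return [
        complete("Summarize the key points and structure of this document:\n\n" + doc)
        for doc in examples
    ]


def draft_document(case_data: str, notes: list[str], complete: Complete) -> str:
    """Stage 2: combine the notes with the current case and ask for a draft."""
    prompt = (
        "Notes from example documents:\n"
        + "\n---\n".join(notes)
        + f"\n\nCurrent case:\n{case_data}\n\n"
        "Write a document for the current case that follows the examples."
    )
    return complete(prompt)


if __name__ == "__main__":
    # Fake model so the sketch runs without any external service.
    def fake_complete(prompt: str) -> str:
        return f"<model output for {len(prompt)} characters of prompt>"

    notes = condense_examples(["Example claim A", "Example claim B"], fake_complete)
    print(draft_document("Water damage reported on 3 May.", notes, fake_complete))
```

Stage 1 only needs to run when the example material changes, so the condensed notes can be stored and reused for every new case.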

A fundamental difference between the two approaches is that the generalist solution offers more flexibility, but requires more expertise from the user to generate valuable outputs. Conversely, the narrow solution can only perform a narrowly defined task, but it has the expertise built into the system, as explained below.

Know your process: The art of prompt engineering

When building a narrow solution, an important part of the work is designing instructions for GPT, known as prompt engineering. While Flowtale has the technical expertise to build the GPT integration, good prompt engineering requires us to also understand the concrete business process in minute detail.

For that we need close and frequent interaction with the company’s subject matter experts, as the solution will always be based on their own process and their know-how.

We help the subject matter experts understand how prompt engineering works. In turn, we need them to break down their process for us and explain the nitty-gritty details of what is important in their work.

Every process is made up of many microtasks, and the subject matter experts often do them subconsciously without even realizing that some individual steps are important. We need to understand this thought process in detail, and we need to be able to express it in very simple terms before giving it to GPT.

You can’t just put all the data in the context and expect to get useful results. We’ve tried it, and it doesn’t work. You have to hold GPT’s hand and tell it “do this, then do that, and now do that”, ideally providing examples of each step.

Let’s say we want to produce a legal document. We need to understand what is important in the first paragraph, what is important in the second paragraph, how the argument should be structured, and so on. We then translate that information into specialized prompts, so the proper input along with relevant source documents will produce a useful output.
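One way to picture the “do this, then do that” approach is a list of small steps, each with its own instruction and example, run one at a time. The sketch below is an illustration under assumptions: the `Step` structure, the prompt layout, and the `complete` callable (a stand-in for the model call) are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Step:
    name: str         # e.g. "first paragraph"
    instruction: str  # what matters in this part, in very simple terms
    example: str      # a short example of a good result for this step


def run_steps(steps: list[Step], source_text: str,
              complete: Callable[[str], str]) -> str:
    """Run one focused prompt per step and join the results into a draft."""
    sections: list[str] = []
    for step in steps:
        # Each step sees what has been written so far, so the text stays coherent.
        draft_so_far = "\n\n".join(sections) or "(nothing yet)"
        prompt = (
            f"Task: write the {step.name}.\n"
            f"Instruction: {step.instruction}\n"
            f"Example of a good result: {step.example}\n\n"
            f"Source material:\n{source_text}\n\n"
            f"Draft so far:\n{draft_so_far}"
        )
        sections.append(complete(prompt))
    return "\n\n".join(sections)
```

The instructions and examples in each `Step` are where the subject matter experts’ know-how ends up, which is why their time is needed to get them right.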

Any budget for integrating a GPT solution needs to include allocation of time for the subject matter experts to experiment with the solution and interact with us. The more interaction, the better the end result.

Now is a good time to start

The benefit of integrating LLMs into the workflow isn’t just about optimizing business processes. It’s like learning how to use search engines. If companies don’t start educating the workforce on how to work with these new tools, they are missing out on a great opportunity.

It’s understandable that some may think they should wait because the technology is moving too fast, and that they should instead get in when things have settled into more established tools. But there’s no benefit in waiting until the “golden tool” comes out.

Working with LLMs is about change management, and we all have to get used to interfacing with computers through a chat. Just like we had to learn to use search engines 25 years ago. Don’t be surprised if within 1-3 years major applications like Microsoft Office have built-in GPT functionality.

Lastly, our solutions are modular, and parts can be upgraded without rebuilding everything. So when the next GPT version comes out, you don’t have to throw away your system, because you simply replace the chat engine with the new and better version.
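As a sketch of what this modularity can look like in code, the example below hides the engine behind a small interface so it can be swapped without touching the rest of the system. The class names and the `complete` method are hypothetical, and the two engines are placeholders that only tag the prompt rather than call a real model.

```python
from typing import Protocol


class ChatEngine(Protocol):
    """Anything with a complete() method can serve as the engine."""

    def complete(self, prompt: str) -> str: ...


class EngineV1:
    def complete(self, prompt: str) -> str:
        return f"[v1] {prompt}"  # placeholder for a call to the current model


class EngineV2:
    def complete(self, prompt: str) -> str:
        return f"[v2] {prompt}"  # placeholder for a call to a newer model


class Assistant:
    """The rest of the system depends only on the ChatEngine interface."""

    def __init__(self, engine: ChatEngine) -> None:
        self.engine = engine

    def answer(self, question: str, context: str) -> str:
        return self.engine.complete(f"Context:\n{context}\n\nQuestion: {question}")


if __name__ == "__main__":
    assistant = Assistant(EngineV1())
    print(assistant.answer("When is the invoice due?", "Invoices are due in 30 days."))
    assistant.engine = EngineV2()  # upgrade: swap the engine, keep everything else
    print(assistant.answer("When is the invoice due?", "Invoices are due in 30 days."))
```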

We hope this blog post has given you a better feel for what GPT is and how it works. Reach out to learn more, and together we’ll explore what GPT can do for your business.